We are a group of particle physicists interested in the nature of elementary particles, the building blocks of matter, and what they imply for our understanding of the universe. Currently we are focused on a particular type of detector called a time projection chamber (TPC). A TPC is essentially a "camera" that allows 2D or 3D "imaging" of charged-particle trajectories at a spatial resolution of a few millimeters, together with calorimetric information (the energy a particle deposits as it traverses the detector). This detailed image data can tell us more about the physics of particles than other classic particle detectors can, and may help uncover new physics phenomena never seen before.

Our research focuses on applying machine learning (ML) techniques to TPC data reconstruction and analysis.

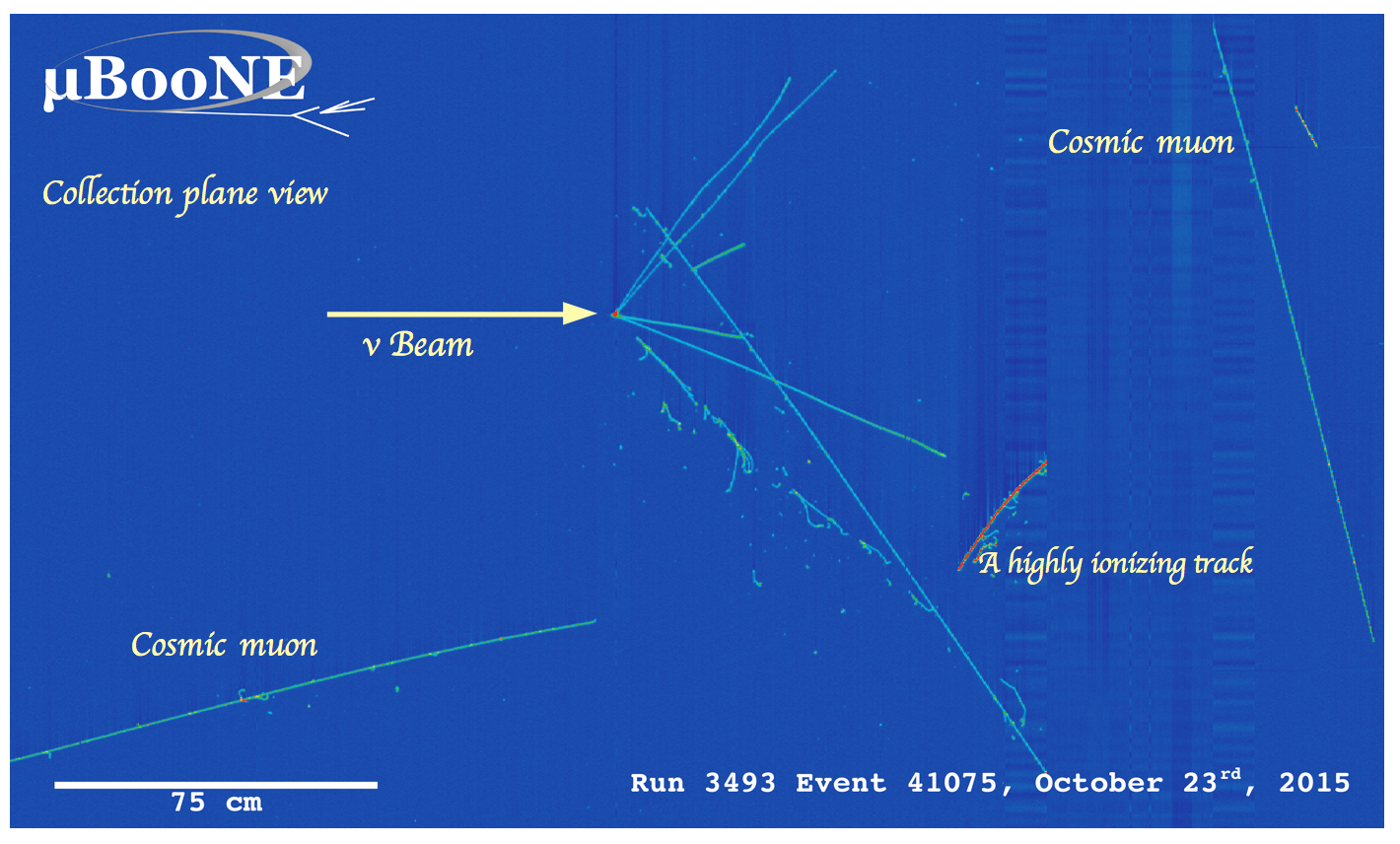

A neutrino interaction candidate in the MicroBooNE liquid-argon (LAr) TPC detector. The detector has three wire planes, each of which records one 2D projection image. This image is from one of the three planes [source and credits: MicroBooNE experiment].

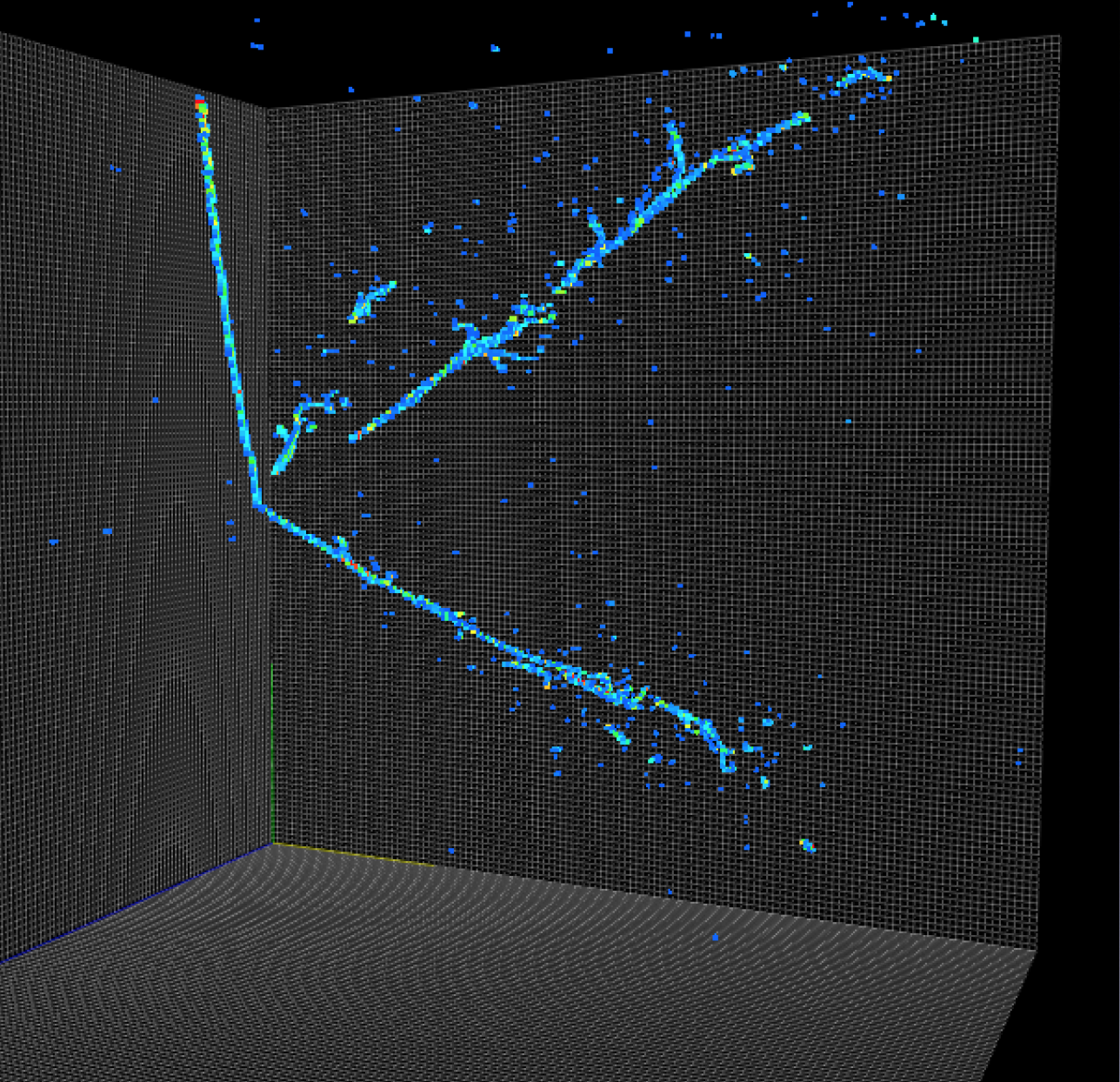

While the TPC is a very powerful detector technology, understanding the detector response and analyzing its complex and highly detailed (and therefore very large) data is challenging. Raw data from a typical TPC is either a 2D or a 3D array of digitized values. There is more than one type of TPC, but they can all be classified into two categories according to their data representation: the "wire detector" and the "pixel detector". A wire detector consists of multiple wire planes, each of which records a 2D projection image of the particle trajectories. Examples of this type of detector are MicroBooNE, SBND, ICARUS, and the DUNE far detector. The example event display from MicroBooNE above gives you an idea. The wire planes have different 2D orientation angles, and data from all planes can be combined to reconstruct the 3D particle trajectories. The accompanying image shows an example of a 3D reconstruction result produced with WireCell from MicroBooNE data.
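To make the geometry concrete, here is a minimal Python sketch of how two wire planes with different orientation angles can pin down a single 3D point. It is an illustration under stated assumptions, not the reconstruction used by any experiment or by WireCell: the function names, the ±60° angles, the coordinate convention (drift along x, wire planes in the y-z plane), and the drift velocity are all hypothetical choices made for this example.

```python
import math

# Illustrative assumptions (not taken from any experiment's software):
# - the drift direction is x, and the wire planes lie in the y-z plane
# - a plane is described by the angle theta of its wire-pitch direction,
#   measured from the z axis
# - DRIFT_VELOCITY is a made-up value in mm per time tick
DRIFT_VELOCITY = 0.8


def project(point, theta):
    """Return the (wire coordinate, time tick) one plane records for a 3D point."""
    x, y, z = point
    wire = z * math.cos(theta) + y * math.sin(theta)
    tick = x / DRIFT_VELOCITY
    return wire, tick


def reconstruct(hit_a, theta_a, hit_b, theta_b):
    """Recover (x, y, z) from the hits of one point on two differently angled planes."""
    (wire_a, tick_a), (wire_b, tick_b) = hit_a, hit_b
    # Determinant of the 2x2 system relating (z, y) to the two wire coordinates.
    det = math.cos(theta_a) * math.sin(theta_b) - math.sin(theta_a) * math.cos(theta_b)
    if abs(det) < 1e-12:
        raise ValueError("planes with the same orientation cannot fix y and z")
    z = (wire_a * math.sin(theta_b) - wire_b * math.sin(theta_a)) / det
    y = (wire_b * math.cos(theta_a) - wire_a * math.cos(theta_b)) / det
    # Both planes see the same drift time; average them to get x.
    x = 0.5 * (tick_a + tick_b) * DRIFT_VELOCITY
    return x, y, z


theta_u, theta_v = math.radians(60), math.radians(-60)
true_point = (120.0, 10.0, 25.0)
hit_u = project(true_point, theta_u)
hit_v = project(true_point, theta_v)
recovered = reconstruct(hit_u, theta_u, hit_v, theta_v)
print(recovered)
```

For a single isolated point, two planes are enough. With many hits arriving at the same time, deciding which hits on different planes belong together becomes the hard part.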

The other type, a "pixel detector", is slightly different. The detector records 3D particle trajectories as a series of simple 2D static images, much as a cell phone records a movie. In this case the raw data is naturally 3D: stacking the images along the time axis yields 3D image data with no further reconstruction. The hardware challenge of building a pixel detector is, however, much greater than for a wire detector. A pixel detector is employed in the NEXT experiment and is planned for the DUNE near detector. Below you can find example particle trajectories in a pixel detector from a simplified simulation.

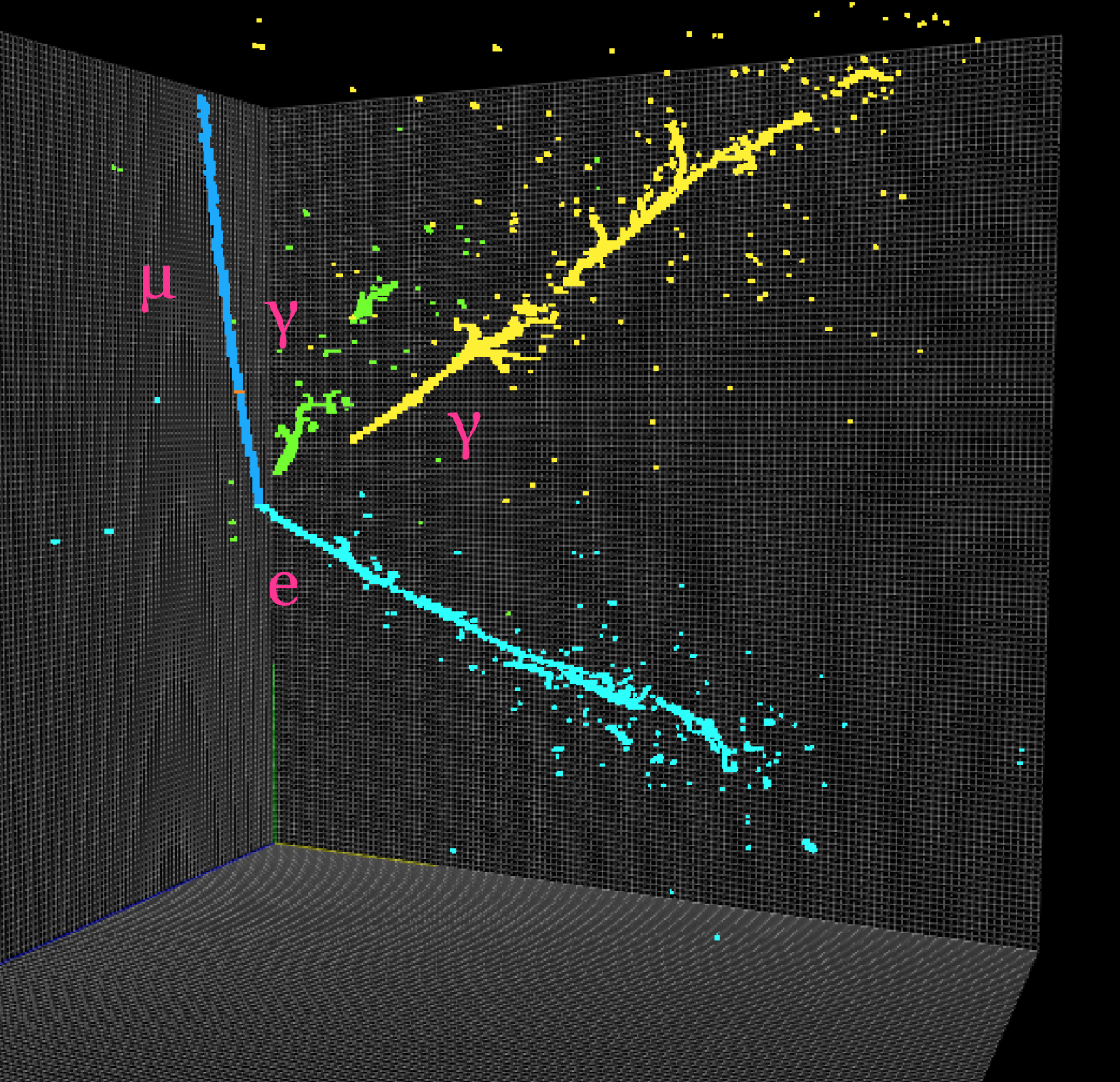

Left: a simulated (non-neutrino) interaction in a 3D volume with two gamma rays, a muon, and an electron. Right: charge depositions clustered by particle instance (different colors indicate separate clusters). Data processing and visualization were done with our larcv software toolkit.
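The frame-stacking idea described for pixel detectors can be sketched in a few lines of Python. This is a toy illustration, not larcv code: the function name `stack_frames`, the threshold argument, and the tiny hand-written frames are all assumptions made for the example.

```python
# Hypothetical sketch: names and values are for illustration only.

def stack_frames(frames, threshold=0.0):
    """Turn a time-ordered list of 2D pixel frames into sparse 3D voxels.

    Each frame is a list of rows of charge values. The frame index becomes
    the third coordinate, so no cross-view matching is needed.
    Returns a list of (row, column, time_index, charge) tuples.
    """
    voxels = []
    for t, frame in enumerate(frames):
        for r, row in enumerate(frame):
            for c, charge in enumerate(row):
                if charge > threshold:
                    voxels.append((r, c, t, charge))
    return voxels


# Three 2x3 frames with a toy "track" that moves one column per time step.
frames = [
    [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0]],
    [[0.0, 0.0, 0.0], [0.0, 2.0, 0.0]],
    [[0.0, 0.0, 1.0], [0.0, 0.0, 0.0]],
]
voxels = stack_frames(frames)
print(voxels)
```

Keeping only the pixels above threshold gives a sparse list of voxels, which is a natural fit for data where most of the volume is empty.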

So, given that (very brief) description of the data, what do we want to use ML for? The common physics goal for all experiments involved so far is simple: we want to isolate a "signal interaction", which can produce an arbitrary number of particles, by identifying each individual particle trajectory and the energy it deposits in the detector. Here is a list of the specific items our group is working on.

We apply deep convolutional neural networks to 2D image and 3D volumetric data to extract feature information of interest. Our successful demonstration of an object detection network that localizes neutrino interactions was published as the first MicroBooNE collaboration paper. We have also applied a 2D semantic segmentation (pixel-level object categorization) network, the results of which were shown at the WIN 2017 conference. Recently we demonstrated the segmentation technique in a 3D detector. Ultimately we aim to perform vertex position estimation and clustering of energy depositions at the particle-instance level. You can find an example of such clustering in the right panel of the 3D particle simulation figure above.

TPCs are ultimately 3D imaging detectors, and reconstructing precise 3D points in a wire detector remains a challenging task. Our group members are studying how to solve this with deep neural networks, by training a network to learn cross-plane pixel-to-pixel correlations. Once established, this technique can predict 3D points from correlated 2D pixels in different projections. In addition, because it learns the pixel correlations, it can potentially cluster correlated 3D points together, enabling instance-level particle clustering.
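To see why cross-plane matching is hard, consider the small Python sketch below. It is a purely geometric toy, not our neural-network approach: it only shows the ambiguity that a learned pixel-to-pixel correlation would need to resolve. The plane angles, the wire-coordinate formula, the matching tolerance, and all names are hypothetical choices for the illustration.

```python
import math
from itertools import product

# Hypothetical geometry, for illustration only: the wire coordinate on a plane
# with angle theta is w = z*cos(theta) + y*sin(theta).
THETA_U, THETA_V, THETA_Y = math.radians(60), math.radians(-60), 0.0
TOLERANCE = 0.3  # assumed matching tolerance, in the same units as w


def wire_coordinate(y, z, theta):
    return z * math.cos(theta) + y * math.sin(theta)


def triangulate(w_u, w_v):
    """Solve for (y, z) from one U-plane and one V-plane wire coordinate."""
    det = math.sin(THETA_V - THETA_U)
    z = (w_u * math.sin(THETA_V) - w_v * math.sin(THETA_U)) / det
    y = (w_v * math.cos(THETA_U) - w_u * math.cos(THETA_V)) / det
    return y, z


# Two true charge deposits (y, z) that arrive at the same drift time.
true_points = [(0.0, 10.0), (5.0, 20.0)]
hits_u = [wire_coordinate(y, z, THETA_U) for y, z in true_points]
hits_v = [wire_coordinate(y, z, THETA_V) for y, z in true_points]
hits_y = [wire_coordinate(y, z, THETA_Y) for y, z in true_points]

# Without knowing which U hit pairs with which V hit, every pairing is a
# candidate 3D point, including "ghost" points that do not exist.
candidates = [triangulate(w_u, w_v) for w_u, w_v in product(hits_u, hits_v)]

# A third plane rejects candidates whose predicted wire has no matching hit.
confirmed = [
    (y, z)
    for y, z in candidates
    if any(abs(wire_coordinate(y, z, THETA_Y) - w) < TOLERANCE for w in hits_y)
]
print(len(candidates), len(confirmed))
```

In this toy, two deposits produce four candidate points from two planes, and the third plane leaves only the two true ones. With dense, extended trajectories the third plane alone no longer removes every ghost, which is where a learned cross-plane correlation could help.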

In general, the simulation tools in our field (particle physics) are quite good. We simulate individual particle interactions to simulate the whole world, which is a computationally expensive "bottom-up" approach. While this technique is powerful, the simulation can never be perfect, and subtle discrepancies with real detector data remain. This is a problem for many of our applications, which rely on supervised learning with simulated samples. In our group we are studying ways to identify such discrepancies in terms of simulation nuisance parameters and to quantify their magnitude, which is essentially the systematic uncertainty, for physics analysis applications.
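As a rough illustration of what quantifying a discrepancy through a nuisance parameter can look like, the toy Python sketch below shifts one simulation parameter by its assumed uncertainty and records how much a derived quantity moves. It is not our analysis method: the linear response model, the "gain" parameter, its 5% uncertainty, and the selection threshold are all invented for the example.

```python
# Toy illustration only: the response model, the "gain" nuisance parameter,
# its assumed 5% uncertainty, and the selection threshold are all hypothetical.

def selection_efficiency(true_energies, gain, threshold):
    """Fraction of events whose measured energy (gain * true) passes the cut."""
    passed = sum(1 for energy in true_energies if gain * energy > threshold)
    return passed / len(true_energies)


true_energies = [i / 10 for i in range(1, 101)]  # 0.1 ... 10.0, arbitrary units
THRESHOLD = 5.0
NOMINAL_GAIN, GAIN_SIGMA = 1.0, 0.05

nominal = selection_efficiency(true_energies, NOMINAL_GAIN, THRESHOLD)
shifted_up = selection_efficiency(true_energies, NOMINAL_GAIN + GAIN_SIGMA, THRESHOLD)
shifted_down = selection_efficiency(true_energies, NOMINAL_GAIN - GAIN_SIGMA, THRESHOLD)

# Take the larger one-sigma shift as the systematic uncertainty on the efficiency.
systematic = max(abs(shifted_up - nominal), abs(shifted_down - nominal))
print(nominal, shifted_up, shifted_down, systematic)
```

The same pattern applies when the derived quantity is the output of a trained network rather than a simple cut: rerun it on samples simulated with shifted parameters and compare.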